Team Name

Team IDK

Timeline

Summer 2021 – Fall 2021

Students

- Hamilton Nguyen – BSSE – Application Developer – Software Testing/Design/UR Controller.

- Jaymee Yi – BSCS – Application Developer – Software Design/UR Effector.

- Marvin Wellington – BSCS – Application Developer – Embedded Machine Learning/Computer Vision.

- Suhail Safder – BSCE – Hardware Engineer – Electrical/Computer Vision.

- Thomas Vu – BSCE – Hardware Engineer/Scrum Master – Electrical/Computer Vision.

- Minh “Jerry” Tram – MSCS – Technical Advisor – Software/Hardware Consulting.

Sponsor

- UTA CSE DEPARTMENT AT $800.00.

- INDIVIDUAL – CHI LEE AT $100.00.

- UR5 ROBOTIC ARM PROVIDED BY CSE SENIOR DESIGN LAB.

- MS-7B83 DESKTOP COMPUTER (MICRO-STAR INTERNATIONAL CO., LTD.) PROVIDED BY CSE SENIOR DESIGN LAB.

- NVIDIA CORPORATION TU104 [GEFORCE RTX 2080] PROVIDED BY CSE SENIOR DESIGN LAB.

Abstract

The Universal Robot 5 (UR5) is a robotic system that allows users to automate repetitive tasks for a specific need and purpose. The purpose of the UR5 in this project is to design, implement, and develop an efficient algorithm that automates repetitive tasks in various scenarios. With the aid of the Open Source Computer Vision Library (OpenCV), the UR5 can identify and sort objects based on their color and shape. The intended audience is professionals in the technology and manufacturing industries, as well as academic researchers. This UR5 project has the potential to become a reliable asset for professionals in the manufacturing industry, helping them maximize productivity and decrease operational costs.

Background

Autonomous technology has been around since the inception of robots in the early 20th century and has been used to solve engineering challenges in many fields. How an autonomous robot perceives and makes decisions depends on what it senses in its surrounding environment. According to the International Federation of Robotics, the robotics industry is expected to grow 12% a year from 2020 to 2022. Autonomous robots offer robustness and ruggedness, along with speed and accuracy, in their routine operations. Sorting, for example, is a challenging task in industrial robotics.

There are three key concepts when it comes to autonomous robots: perception, decision, and actuation. Humans perceive through their five senses: sight, hearing, smell, taste, and touch. By analogy, a robot perceives through electronic sensors. Humans make decisions with the frontal lobe of the cerebrum, while robots make decisions by running procedural or object-oriented algorithms on the data they receive from their sensors. People move with their muscles, while robots use several types of actuators, effectors, and motors to execute specific movements. By exploiting these three key concepts, we can develop and deploy a software system that uses Universal Robots products to simplify the identification, classification, and sorting of objects.
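The perception, decision, and actuation cycle can be sketched as a minimal control loop. This is a hypothetical illustration, not the project's code: `run_cycle`, `Action`, and the lambdas are placeholder names, and the "sensor" is a simulated list of color readings standing in for camera data.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Action:
    """Hypothetical actuator command: which bin to drop the object into."""
    bin_name: str


def run_cycle(
    read_sensor: Callable[[], Iterable[str]],
    decide: Callable[[str], Action],
    actuate: Callable[[Action], None],
) -> int:
    """Run one perceive -> decide -> act pass per observation; return the count handled."""
    handled = 0
    for observation in read_sensor():   # perception: data from (simulated) sensors
        action = decide(observation)    # decision: an algorithm maps data to an action
        actuate(action)                 # actuation: an effector or motor carries it out
        handled += 1
    return handled


# Simulated example: route colored parts to matching bins.
executed: List[str] = []
count = run_cycle(
    read_sensor=lambda: ["red", "blue", "red"],
    decide=lambda color: Action(bin_name=f"{color}_bin"),
    actuate=lambda action: executed.append(action.bin_name),
)
print(count, executed)  # 3 ['red_bin', 'blue_bin', 'red_bin']
```

Keeping the three stages as separate callables mirrors the analogy above: each stage can be swapped (a real camera, a different sorting rule, a real arm) without touching the others.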

Project Requirements

- Customer Requirements
  - Identify Object to Pick and Place
  - Classify by Shape
  - Classify by Color
  - Autonomous Identification
  - Autonomous Movement

- Packaging Requirements
  - Universal Robots UR5
  - Intel RealSense Depth Camera D415
  - Software Program/Source Code

- Performance Requirements
  - Camera
  - UR5
  - Vacuum Pump
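The two classification requirements can be illustrated with a small, dependency-free sketch. It is not the team's method: the hue bands and fill-ratio thresholds are hypothetical values, the color input is a single averaged RGB value, and the shape input is a binary mask given as a list of rows. Shapes are told apart only by how much of their bounding box they fill (about 1.0 for an axis-aligned rectangle, about π/4 ≈ 0.785 for a circle, about 0.5 for a right triangle).

```python
import colorsys
from typing import Sequence, Tuple

# Hypothetical hue bands in degrees; a real system would tune these to its lighting.
HUE_BANDS = [
    ("red", 0, 20), ("yellow", 20, 70), ("green", 70, 170),
    ("blue", 170, 260), ("purple", 260, 330), ("red", 330, 360),
]


def classify_color(rgb: Tuple[int, int, int]) -> str:
    """Name the color of an averaged 8-bit RGB value by its HSV hue."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)   # h, s, v all in [0, 1]
    if v < 0.2:
        return "black"
    if s < 0.2:
        return "white" if v > 0.8 else "gray"
    hue_deg = h * 360.0
    for name, lo, hi in HUE_BANDS:
        if lo <= hue_deg < hi:
            return name
    return "red"  # defensive fallback; rgb_to_hsv keeps h below 1.0


def classify_shape(mask: Sequence[Sequence[int]]) -> str:
    """Guess a shape from the fraction of its bounding box that the mask fills."""
    pixels = [(y, x) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not pixels:
        return "none"
    ys = [p[0] for p in pixels]
    xs = [p[1] for p in pixels]
    box_area = (max(ys) - min(ys) + 1) * (max(xs) - min(xs) + 1)
    fill = len(pixels) / box_area
    if fill > 0.9:
        return "rectangle"
    if fill > 0.65:
        return "circle"
    return "triangle"


print(classify_color((255, 0, 0)), classify_color((30, 60, 220)))  # red blue
```

The fill ratio is rotation-sensitive (a square turned 45° fills only half of its axis-aligned box), so counting polygon vertices with OpenCV's `cv2.findContours` and `cv2.approxPolyDP` is a common, more robust alternative.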

System Overview

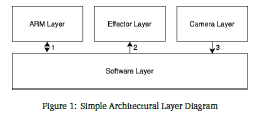

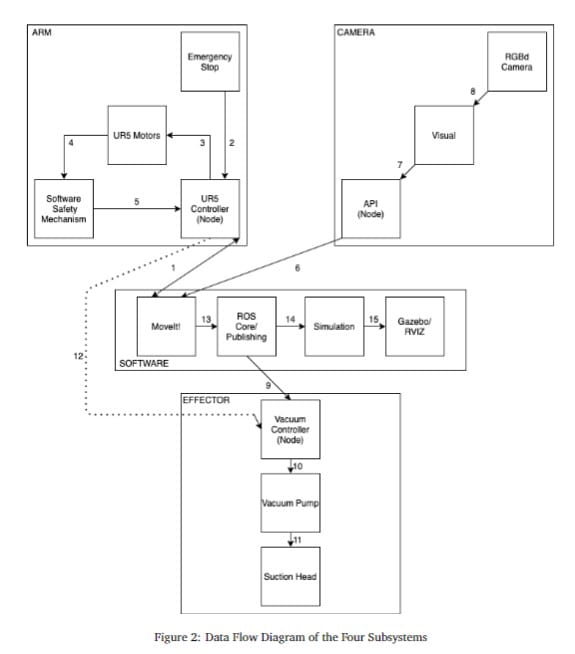

The Software Layer, called the Master Layer, is the main point of communication with the Arm, Effector, and Camera Layers, which act as Slave Layers. When the source packages are executed (with roslaunch or rosrun), the Camera Layer publishes RGB data. The Software Layer simultaneously subscribes to the Camera Layer's constant live feed, processes the data it obtains, and moves the Arm and Effector according to the processed data, without the need for external intervention from users.
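The publish/subscribe flow between the layers can be sketched in plain Python. This is an in-process stand-in for ROS topics, not ROS itself (in ROS 1 the same roles would be played by `rospy.Publisher` and `rospy.Subscriber`); the class names, the topic names such as `/camera/rgb`, and the `object_center` field are all hypothetical.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List


class Bus:
    """Tiny in-process stand-in for ROS topics: publish() invokes every subscriber callback."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[Any], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, message: Any) -> None:
        for callback in self._subs[topic]:
            callback(message)


class SoftwareLayer:
    """Master layer: listens to camera frames and commands the arm and effector layers."""

    def __init__(self, bus: Bus) -> None:
        self.bus = bus
        bus.subscribe("/camera/rgb", self.on_frame)

    def on_frame(self, frame: dict) -> None:
        target = frame.get("object_center")   # hypothetical processed result
        if target is None:
            return                             # nothing detected: issue no commands
        self.bus.publish("/arm/goal", target)
        self.bus.publish("/effector/suction", True)


bus = Bus()
master = SoftwareLayer(bus)
log: List[tuple] = []
bus.subscribe("/arm/goal", lambda goal: log.append(("arm", goal)))
bus.subscribe("/effector/suction", lambda on: log.append(("effector", on)))
bus.publish("/camera/rgb", {"object_center": (320, 240)})  # camera layer publishes a frame
bus.publish("/camera/rgb", {})                              # empty frame: no commands
print(log)  # [('arm', (320, 240)), ('effector', True)]
```

The camera never calls the arm directly; only the master layer decides, which is what lets the slave layers be replaced independently.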

Results

Our group successfully implemented an algorithm that processes raw camera data to identify the center of each object for use in template matching. The algorithm also plans the movement of the UR5 from a designated home position to the object, then picks up the piece by suction through a vacuum-nozzle effector.
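One standard way to find an object's center is to take the centroid of its binary mask from raw image moments: the centroid is (m10/m00, m01/m00). The sketch below assumes the object has already been segmented into a 0/1 mask; it illustrates the technique and does not describe the team's exact pipeline. OpenCV's `cv2.moments(mask, binaryImage=True)` returns the same `m00`, `m10`, and `m01` values.

```python
from typing import Optional, Sequence, Tuple


def mask_centroid(mask: Sequence[Sequence[int]]) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of nonzero pixels via raw image moments, or None if empty."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1   # area (pixel count)
                m10 += x   # sum of x coordinates
                m01 += y   # sum of y coordinates
    if m00 == 0:
        return None
    return (m10 / m00, m01 / m00)


mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(mask_centroid(mask))  # (2.0, 1.5)
```

The result is in pixel coordinates; moving the arm to it still requires a camera-to-robot calibration, which this sketch does not cover.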

Our next steps are to optimize the UR5's workspace and the quality of the image data obtained from the camera. This would allow us to incorporate machine learning algorithms and build a larger database for more accurate template/object matching.
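Template matching slides a small reference image over the camera image and scores each position. The sketch uses the sum of squared differences (SSD), where a lower score means a closer match; the project's actual matcher and scoring are not described here, so SSD is an assumed, illustrative choice. OpenCV provides the same idea as `cv2.matchTemplate` with `cv2.TM_SQDIFF`.

```python
from typing import Sequence, Tuple

Image = Sequence[Sequence[float]]


def match_template_ssd(image: Image, template: Image) -> Tuple[Tuple[int, int], float]:
    """Return ((x, y) of the best top-left corner, its SSD score); lower is better."""
    ih, iw = len(image), len(image[0])
    th, tw = len(template), len(template[0])
    if th > ih or tw > iw:
        raise ValueError("template is larger than the image")
    best_pos, best_score = (0, 0), float("inf")
    for y in range(ih - th + 1):
        for x in range(iw - tw + 1):
            score = 0.0
            for dy in range(th):
                for dx in range(tw):
                    diff = image[y + dy][x + dx] - template[dy][dx]
                    score += diff * diff
            if score < best_score:
                best_pos, best_score = (x, y), score
    return best_pos, best_score


image = [
    [0, 0, 0, 0, 0],
    [0, 0, 9, 8, 0],
    [0, 0, 7, 6, 0],
    [0, 0, 0, 0, 0],
]
template = [[9, 8], [7, 6]]
print(match_template_ssd(image, template))  # ((2, 1), 0.0)
```

Raw SSD is sensitive to lighting changes, which is one reason the image-quality work above matters before adding learned classifiers.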

Future Work

- Occlusion Handling.

- Camera/Image Distortion.

- Template/Object Matching.

- Optimization of Robotic Workspace.

- Optimization of Image Quality.

- Object classification of complex geometric and non-geometric objects.

- Sorting strategies.

- Implementing additional camera sensors.
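For the camera/image distortion item, a common starting point is a radial lens-distortion model on normalized image coordinates, x_d = x(1 + k1·r² + k2·r⁴) with r² = x² + y². The sketch assumes that model and made-up coefficients k1 and k2; a real camera's coefficients would come from calibration (for example OpenCV's `cv2.calibrateCamera`). It inverts the model by fixed-point iteration, which converges when the distortion is mild.

```python
from typing import Tuple


def distort(x: float, y: float, k1: float, k2: float) -> Tuple[float, float]:
    """Apply two-term radial distortion to normalized coordinates (x, y)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort(xd: float, yd: float, k1: float, k2: float,
              iterations: int = 20) -> Tuple[float, float]:
    """Invert distort() by fixed-point iteration, starting from the distorted point."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y


# Round trip with hypothetical coefficients (mild barrel distortion).
xd, yd = distort(0.3, -0.2, k1=-0.1, k2=0.01)
print(undistort(xd, yd, k1=-0.1, k2=0.01))  # approximately (0.3, -0.2)
```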

Project Files

Project Charter

System Requirements Specification

Architectural Design Specification

Detailed Design Specification

References

- Intel. Intel RealSense Depth Camera D415, July 2021.

- Universal Robots. Universal Robots Service Manual v3.2.7, July 2021.

- Universal Robots. Universal Robots User Manual v1.6, July 2021.

- Universal Robots. UR5 Robot, July 2021.

- Olympus Technologies. Universal Robots, July 2021.

- Garth Zeglin. UR5 Robot Arm: User Guide, July 2021.

- Cody Van Cleve. ASU Polytechnic-Senior Design Project, July 2021.

- FANUC America Corporation. Recycling Robots – Companies Turn to Robots to Help Sort Recyclables Waste – Waste Robotics, July 2021.

- Boston Dynamics. Spot Arm, 2021.

- Boston Dynamics. Spot’s Got an Arm!, July 2021.

- ACIN-Advanced Mechatronic Systems Group. Screw Sorting Robot with Machine Vision, July 2021.

- Adam Savage’s Tested. The Animatronic Robot Designs of Mark Setrakian!