Team Name

IGVC Computer Vision Team 2024

Timeline

Fall 2023 – Spring 2024

Students

- Brandon Bowles

- James Caetano, Jr.

- Talha Nayyar

- Sameer Dayani

- William Perriman

Sponsor

Dr. Christopher D. McMurrough

Associate Professor of Instruction at University of Texas – Arlington

Computer Science and Engineering Department

Abstract

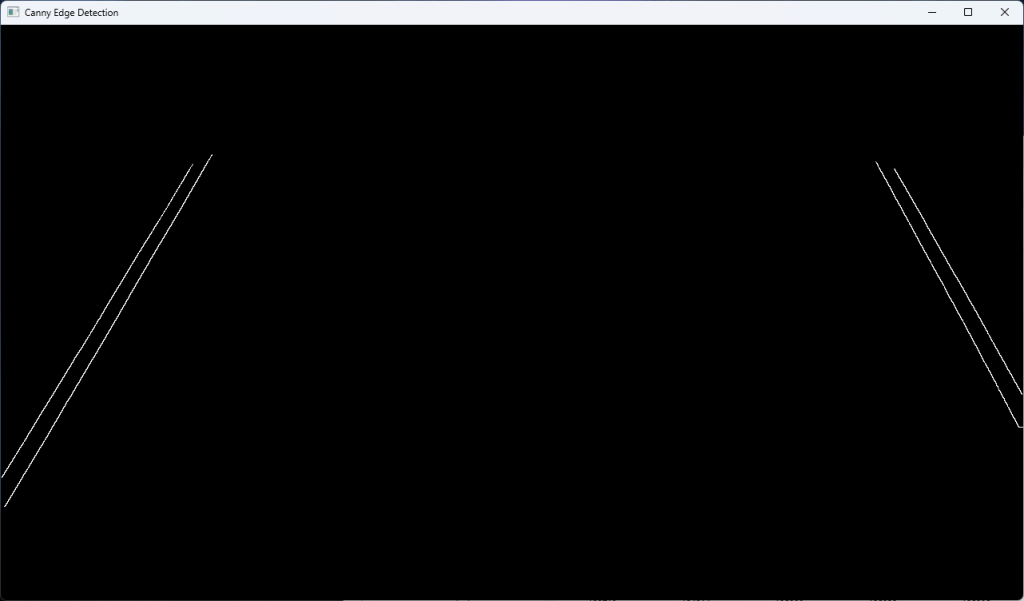

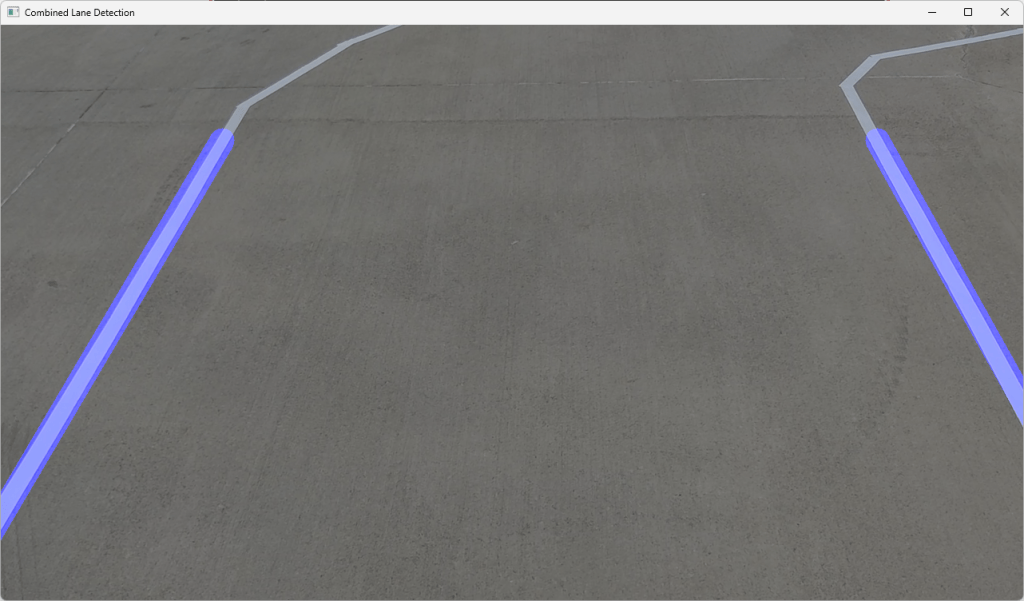

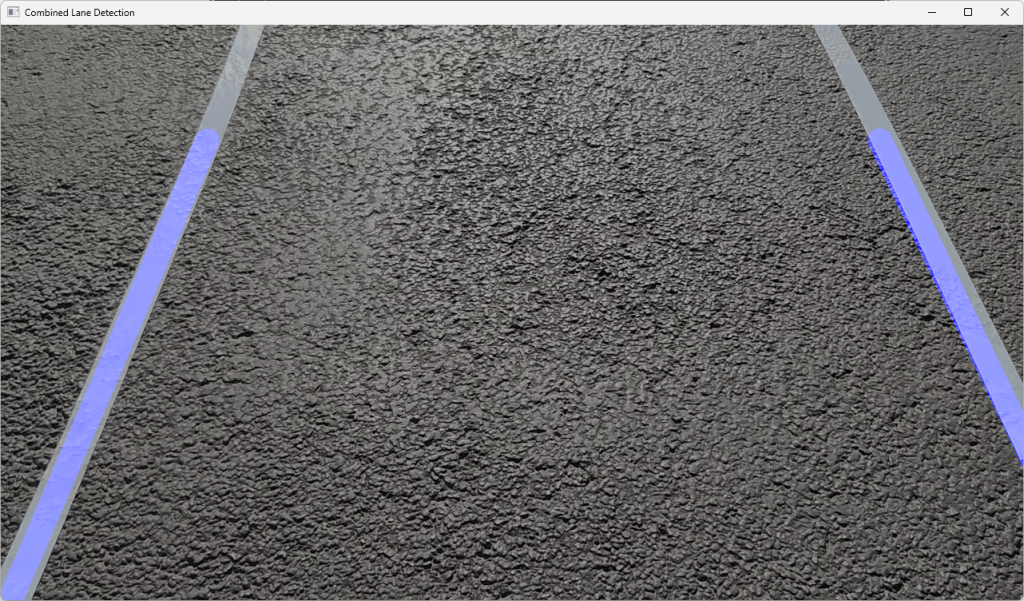

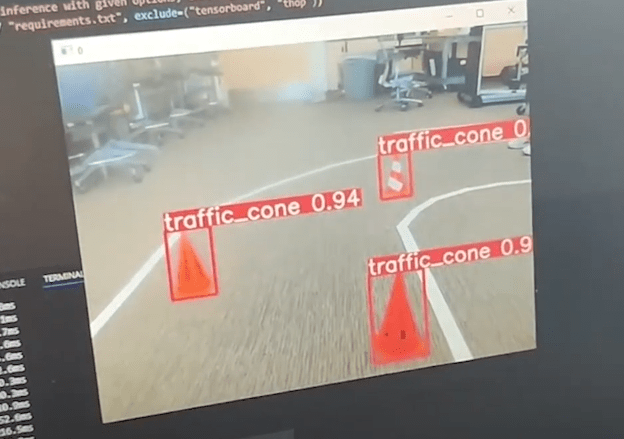

The project aims to develop a modular computer vision system for autonomous vehicles, specifically for the vehicle representing The University of Texas at Arlington in the Intelligent Ground Vehicle Competition (IGVC). Using computer vision and LIDAR technologies, the system is scalable and tailored to the IGVC requirements and test course. It lets the vehicle navigate the course by accurately identifying and avoiding obstacles while staying on track through lane detection. Lane detection uses Canny edge detection to find lane edges, with Gaussian blur and erosion and dilation to filter noise. For object recognition, a trained YOLOv5 model was used for its performance and accuracy. Both lane detection and object recognition output a dot matrix as depth data to the path planning team.

Background

The Intelligent Ground Vehicle Competition (IGVC) is a yearly engineering competition that tasks teams with designing and building a robot that can navigate a course without hitting any obstacles. We are one of three teams working on UTA’s vehicle this iteration, and our focus is lane and object detection. Competing and innovating in this competition matters because autonomous vehicles have the potential to bring about unprecedented leaps forward in road and vehicle safety (McGinness). According to tests conducted by AAA in June 2022, however, fully autonomous vehicles are still a long way off: safety features designed to prevent crashes failed in multiple situations. That is why investment in and support for this project and its development efforts are paramount. Using a monocular camera can reduce overall system cost and estimate object distance more accurately. This design is constrained by the camera's field of view (FOV), mounting height, and stabilization (Pidurkar et al.).
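To illustrate why FOV and mounting height constrain a monocular design, the sketch below estimates ground distance from a pixel row using a simple pinhole model on a flat surface. It is an assumption-laden example rather than the method used on the vehicle: the function name `ground_distance`, the camera height, the pitch angle, and the resolution are hypothetical values.

```python
import math


def ground_distance(pixel_row, image_height, vertical_fov_deg,
                    camera_height, pitch_down_deg):
    """Distance along flat ground to the point seen at `pixel_row`.

    Assumes a pinhole camera, a flat ground plane, and row 0 at the top of
    the image. The result is in the same unit as `camera_height`.
    Returns None when the pixel looks at or above the horizon.
    """
    # Focal length in pixels, derived from the vertical field of view.
    focal_px = (image_height / 2) / math.tan(math.radians(vertical_fov_deg) / 2)
    # Rows below the image center look further downward.
    offset = pixel_row - image_height / 2
    angle_below_horizon = math.radians(pitch_down_deg) + math.atan2(offset, focal_px)
    if angle_below_horizon <= 0:
        return None
    return camera_height / math.tan(angle_below_horizon)


# Hypothetical setup: camera 30 inches above ground, pitched down 20 degrees,
# 480-row image with a 60-degree vertical FOV.
for row in (240, 360, 480):
    print(row, ground_distance(row, 480, 60, 30.0, 20))
```

Because distance depends on the tangent of the viewing angle, small errors in pitch (for example, from an unstabilized mount) produce large distance errors for points near the horizon.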

Project Requirements

- Object Detection and Recognition

- Autonomous Lane Detection

- Noise Robustness and Reduction

- Object Tracking

- Camera Calibration

- Outputting Data to Path Planning

- Handling Obstacles Obstructing Lane Views

- Real-Time Performance

- Identifying Optimal Camera Height and FOV (Field of View)

- Documentation and Testing

System Overview

Results

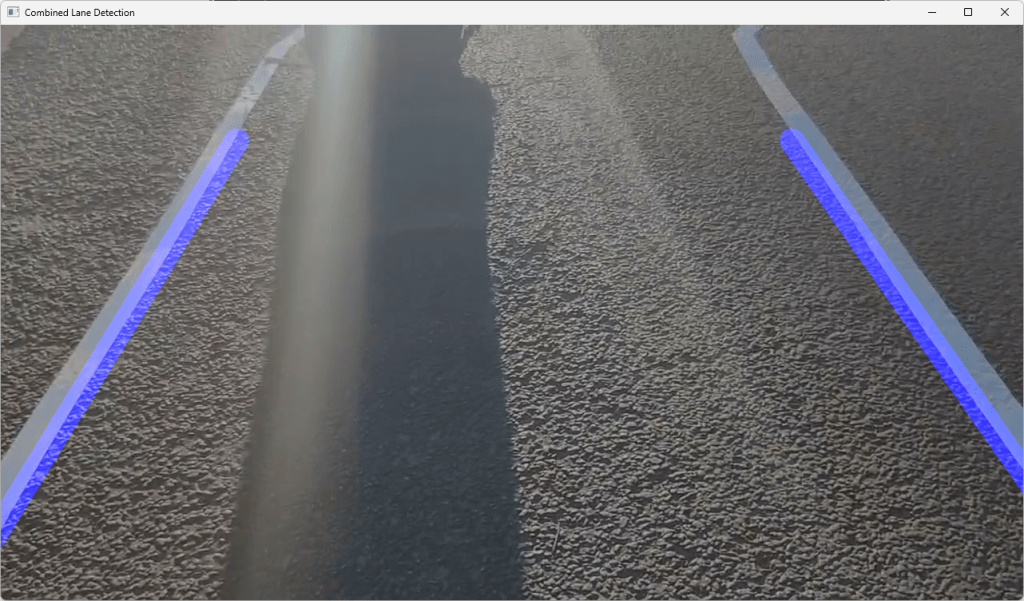

Using Canny edge detection, Hough transforms, and YOLOv5, our team has developed an efficient and accurate lane and object detection solution. It can find and report the location of either a single lane or two lanes, including a distance measure accurate to within roughly 5 inches on a flat surface.
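One common way to distinguish a single lane from two lanes after a Hough transform is to group the detected line segments by slope. The sketch below shows that idea under stated assumptions: segments are `(x1, y1, x2, y2)` tuples in image coordinates (such as those returned by OpenCV's `cv2.HoughLinesP`), and the function names and the `min_abs_slope` threshold are hypothetical, not taken from the team's code.

```python
def split_lane_segments(segments, min_abs_slope=0.3):
    """Group line segments into left and right lane candidates by slope.

    In image coordinates y grows downward, so a lane line on the left of
    the vehicle typically has a negative slope and one on the right a
    positive slope.
    """
    left, right = [], []
    for x1, y1, x2, y2 in segments:
        if x1 == x2:
            continue  # vertical segment: slope undefined, skipped in this sketch
        slope = (y2 - y1) / (x2 - x1)
        if abs(slope) < min_abs_slope:
            continue  # near-horizontal segments are unlikely to be lane lines
        (left if slope < 0 else right).append((x1, y1, x2, y2))
    return left, right


def describe_lanes(segments):
    """Report whether zero, one, or two lane sides were found."""
    left, right = split_lane_segments(segments)
    if left and right:
        return "two lanes"
    if left or right:
        return "single lane"
    return "no lane"


segments = [(50, 200, 120, 100), (270, 200, 200, 100), (0, 50, 100, 52)]
print(describe_lanes(segments))
```

Skipping vertical segments keeps the example short; a fuller version would likely classify them by horizontal position instead.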

Edge Detection Results

Lane Detection Results

Object Detection Results

Future Work

The next step continues the original plan: integrating lane and object detection with the pathfinding team's work to drive the as-yet unfinished autonomous vehicle, and then entering the IGVC. For integration, two Raspberry Pis and a laptop controller will all be in communication.

Project Files

System Requirements Specification (link)

Architectural Design Specification (link)

Detailed Design Specification (link)

References

1. Chris McGinness. Fully autonomous vehicles still a long way off, report finds, 2022.

2. Ashish Pidurkar, Ranjit Sadakale, and A.K. Prakash. Monocular camera-based computer vision system for cost effective autonomous vehicle. In 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), pages 1–5, 2019.

IGVC – Computer Vision