Timeline

Summer – Fall 2019

Students

- Nhan Le

- Minh Tram

- Nhan Lam

- Hunter Nghiem

- David Chavez

Abstract

The goal of this project is to create a gimbal that provides a live video feed of the surrounding area to a Virtual Reality (VR) headset. It gives customers a live view of areas that are inaccessible or difficult for humans to reach. This allows safe access to places that would be hazardous to humans, for example exploring a high-radiation area or inspecting the undercarriage of a car at a checkpoint for bombs or restricted components.

Background

There are many places around the world that are not physically reachable by humans. These range from unexplored areas to man-made disaster areas such as Chernobyl or the Fukushima nuclear plant after its March 2011 incident. Current solutions, which include three-dimensional mapping, can give a good idea of how certain areas look, but they do not provide enough detail about the environment. In border security, one current solution is a piece of glass attached to a pole, used to check for possibly harmful materials underneath vehicles. Even though this gives a direct live view, it is an outdated solution that might not provide enough coverage and takes longer to cover certain areas. Other present-day solutions use robotics and computer vision to provide live feeds to an operator. However, some of these camera solutions cannot reorient quickly enough to provide a responsive video feed of the environment. Today’s autonomous robots might also not be smart enough and will occasionally need a manual override from the operator.

Project Requirements

- The camera gimbal system shall synchronize with the user’s head movements, using the VR headset as a reference.

- The camera shall provide a live video feed to the VR headset display with minimal delay.

- The live video feed shall be in color, have adequate resolution with minimal input delay, and run at a minimum of sixty frames per second.

- The time delay between the user's headset movement and the camera movement shall be minimal.

System Overview

Currently, there are three possible implementations under consideration for the project:

- A single camera on an omnidirectional motorized gimbal that translates VR headset motion into synchronous camera motion.

- 4-6 stationary cameras pointing in all directions of the field of view (FOV), rendering a “pseudo” realistic world.

- 2-3 cameras, with one following the live head motion and one or two updating the surroundings by spinning around an axis and delta-updating the video feed.

For the single camera system, the camera will be mounted on a rigid, rotating platform that can cover 360° on the horizontal plane and up to 100° vertically to simulate commonly used human head motions. The platform will initially be actuated by a system of stepper motors combined with a mechanical transmission and end-stops to provide smooth, precise, and stable camera motion. All video from the single camera will be fed directly to a computer, which processes the video, feeds it to the VR headset, and transfers the headset motion back to the motor controllers to execute the movement.

For the 4-6 stationary camera system, the VR headset motion will be virtualized and translated to a viewport in a stereo video hemisphere constructed from the set of cameras. Essentially, the region the user is looking at in the virtual world will be the only real-time frame. All other areas will be set to passive delta updating (change detection) to simulate a live feed. This means that the user sees a virtualized “pseudo-real-time” view that looks identical to the view in the single camera system, while eliminating the motion control section and therefore drastically improving response time and image stability.

The last solution is a hybrid of the first two, with one mounted camera that follows the head motion and another that performs the passive updating by rotating at a higher speed and delta-updating the scene frame by frame. This option was considered as a compromise to preserve bandwidth for live viewing and to achieve a faster real-time video rendering rate, since part of the scene is already rendered with the help of the secondary camera.
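The passive delta updating (change detection) described for the stationary-camera option can be illustrated with a minimal Python sketch. It assumes grayscale frames stored as nested lists, a fixed tile size, and a mean-absolute-difference threshold. The function names `changed_tiles` and `delta_update`, the tile size, and the threshold are hypothetical choices for illustration and are not taken from the project's implementation.

```python
from typing import List, Tuple

# Assumption: a frame is a grayscale image stored as rows of 0-255 pixel values.
Frame = List[List[int]]


def changed_tiles(prev: Frame, curr: Frame, tile: int = 2,
                  threshold: float = 10.0) -> List[Tuple[int, int]]:
    """Return the (row, col) origin of every tile whose mean absolute
    pixel difference between prev and curr exceeds the threshold."""
    height, width = len(curr), len(curr[0])
    changed = []
    for r0 in range(0, height, tile):
        for c0 in range(0, width, tile):
            total = 0
            count = 0
            for r in range(r0, min(r0 + tile, height)):
                for c in range(c0, min(c0 + tile, width)):
                    total += abs(curr[r][c] - prev[r][c])
                    count += 1
            if total / count > threshold:
                changed.append((r0, c0))
    return changed


def delta_update(scene: Frame, curr: Frame, tile: int = 2,
                 threshold: float = 10.0) -> int:
    """Copy only the changed tiles from curr into the stored scene (in place)
    and return how many tiles were updated."""
    height, width = len(curr), len(curr[0])
    tiles = changed_tiles(scene, curr, tile, threshold)
    for r0, c0 in tiles:
        for r in range(r0, min(r0 + tile, height)):
            for c in range(c0, min(c0 + tile, width)):
                scene[r][c] = curr[r][c]
    return len(tiles)
```

In a design like this, regions outside the user's viewport would only cost bandwidth when something in them actually changes, which is the motivation the description above gives for delta updating.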

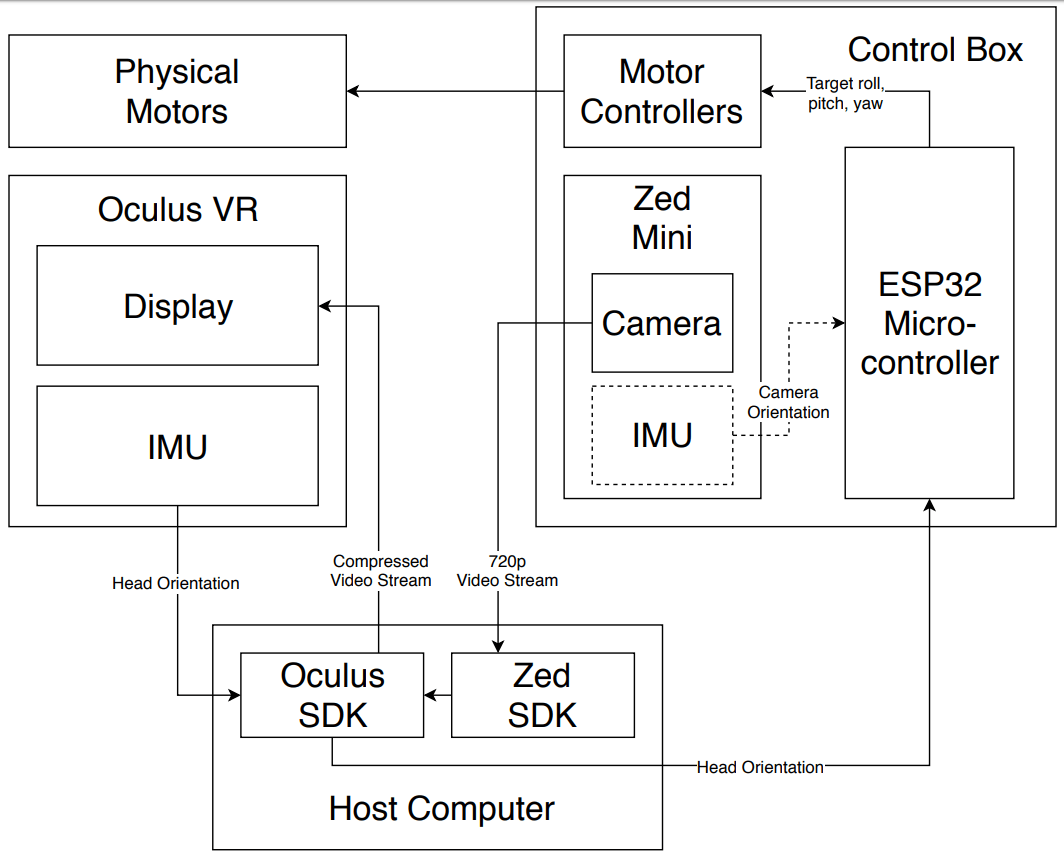

Ideally, this is how the data should be handled and how the system should operate:

Results

- The gimbal system can move synchronously with the VR headset’s movement.

- The camera provides a live video feed to the VR headset in High Definition resolution at 60 frames per second.

- To reduce the complexity of the physical movement, one rotation axis was removed. The gimbal system currently supports pitch and yaw movements.

- On start-up, the gimbal system can adjust itself (homing) to match the current direction of the VR headset, with a small residual offset.
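The homing step in the results above can be sketched as follows. This is a minimal illustration, not the project's code: it assumes yaw angles in degrees, a hypothetical `steps_per_rev` of 3200 steps per full output revolution (any transmission ratio is assumed to be folded into that number), and the helper names `shortest_angle_deg` and `homing_move` are invented for this example. It also shows one possible reason a small offset can remain after homing: the required rotation is rounded to a whole number of steps.

```python
from typing import Tuple


def shortest_angle_deg(target: float, current: float) -> float:
    """Signed smallest rotation from current to target, in [-180, 180)."""
    return (target - current + 180.0) % 360.0 - 180.0


def homing_move(headset_yaw: float, gimbal_yaw: float,
                steps_per_rev: int = 3200) -> Tuple[int, float]:
    """Return (steps, residual_deg) needed to align the gimbal yaw with the headset.

    steps        -- signed whole number of motor steps to command
    residual_deg -- angle left over because steps must be an integer
    """
    delta = shortest_angle_deg(headset_yaw, gimbal_yaw)
    steps = round(delta * steps_per_rev / 360.0)
    residual = delta - steps * 360.0 / steps_per_rev
    return steps, residual
```

Using the signed shortest rotation avoids turning the long way around when the headset and gimbal headings sit on opposite sides of the 0°/360° boundary, for example a headset at 10° and a gimbal at 350°.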

Future Work

- Add the third rotation axis (roll)

- Miniaturize the gimbal system

- Send communication data over wireless

- Improve live video resolution

- Reduce motion sickness for users

- Increase range of movement on gimbal system

Project Files

ROB_System_Requirement_Specifications